L2G2G: A Scalable Local-to-Global Network Embedding with Graph Autoencoders

Nov 28, 2023

1 min read

RuiKang OuYang

Andrew Elliott

Stratis Limnios

Mihai Cucuringu

Gesine Reinert

Publication

In International Conference on Complex Networks and Their Applications

- Scalable, stable and efficient unsupervised pretraining is a fundamental problem in machine learning.

- Graph-structured data is prevalent today in various domains, such as social networks, web graphs, and protein-protein interaction networks, often scaling to millions of nodes and edges.

- Unsupervised pretraining on large-scale graphs can be inefficient and unstable.

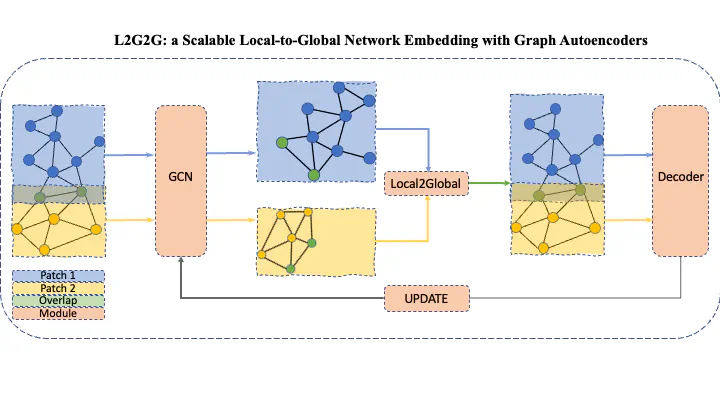

In this work, we propose Local2GAE2Global, a parallelizable and distributable framework that relies on dynamic latent synchronization:

- A large-scale graph is first divided into patches;

- An encoding machine, e.g. a Graph Autoencoder (with shared weights), then computes the local embedding of each node in each patch in parallel;

- The local2global algorithm is applied to synchronize those local embeddings to global ones;

- Finally, a non-learnable decoder, i.e. the inner product, decodes the global embeddings, which are then used to compute the usual reconstruction loss for neural network optimization.
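
The four steps above can be illustrated with a small NumPy sketch. It is not the authors' implementation, and it makes several simplifying assumptions: the helper names (`make_patches`, `procrustes_align`, `synchronize`, `reconstruction_loss`) are hypothetical, the graph is a toy example, and the Graph Autoencoder is replaced by simulated encoder outputs (a hidden embedding seen through a random rotation and shift per patch). The synchronization step is a sequential orthogonal Procrustes alignment on overlapping nodes, a simpler stand-in for the local2global algorithm.

```python
import numpy as np


def make_patches(num_nodes, patch_size, overlap):
    """Step 1: split node ids into consecutive, overlapping patches."""
    step = patch_size - overlap
    patches, start = [], 0
    while True:
        end = min(start + patch_size, num_nodes)
        patches.append(np.arange(start, end))
        if end == num_nodes:
            return patches
        start += step


def procrustes_align(source, target):
    """Rotation/reflection R and shift t minimising ||source @ R + t - target||."""
    mu_s, mu_t = source.mean(axis=0), target.mean(axis=0)
    u, _, vt = np.linalg.svd((source - mu_s).T @ (target - mu_t))
    rot = u @ vt
    return rot, mu_t - mu_s @ rot


def synchronize(patches, local_embs, num_nodes):
    """Step 3 (simplified): align each patch to the frame of the first patch
    using shared nodes, then average the copies of overlapping nodes."""
    dim = local_embs[0].shape[1]
    total = np.zeros((num_nodes, dim))
    count = np.zeros(num_nodes)
    total[patches[0]] += local_embs[0]
    count[patches[0]] += 1
    for nodes, emb in zip(patches[1:], local_embs[1:]):
        seen = count[nodes] > 0  # nodes already placed by earlier patches
        target = total[nodes[seen]] / count[nodes[seen]][:, None]
        rot, shift = procrustes_align(emb[seen], target)
        total[nodes] += emb @ rot + shift
        count[nodes] += 1
    return total / count[:, None]


def reconstruction_loss(z, adj):
    """Step 4: inner-product decoder followed by binary cross-entropy."""
    logits = z @ z.T
    # Numerically stable form of BCE-with-logits.
    bce = np.maximum(logits, 0) - logits * adj + np.log1p(np.exp(-np.abs(logits)))
    return bce.mean()


rng = np.random.default_rng(0)
num_nodes, dim = 20, 2
z_true = rng.normal(size=(num_nodes, dim))     # hidden "ideal" embedding (toy data)
adj = (z_true @ z_true.T > 0).astype(float)    # toy adjacency matrix

patches = make_patches(num_nodes, patch_size=8, overlap=4)

# Step 2 (simulated): each patch sees the embedding in its own coordinate frame.
local_embs = []
for nodes in patches:
    q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))  # random orthogonal matrix
    local_embs.append(z_true[nodes] @ q + rng.normal(size=dim))

z_global = synchronize(patches, local_embs, num_nodes)
loss = reconstruction_loss(z_global, adj)
```

In an actual training loop the loss would be backpropagated through the synchronized embeddings into the shared encoder weights; the sketch only evaluates it once. The alignment also needs each overlap to contain more nodes than the embedding dimension, which is why the toy setup uses an overlap of 4 for 2-dimensional embeddings.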

Additionally, the nature of patch training enables its direct application to Federated Learning, e.g. on health networks and financial transaction networks, which remains an open direction for future work.